Projects

Kaggle-AI Model Prediction 2023/11

A competition exploring optimization objectives and methods for AI algorithms. Our aim was to train a machine learning model on the runtime data in the training set and then predict the runtime of the graphs and configurations in the test set. We addressed this with a Graph Convolutional Network (GCN) model, using GCN convolution layers (GCNConv) to process the graph structure and to propagate and aggregate information between nodes. To further improve prediction accuracy, we applied model fusion to this model. Our work enabled runtime prediction for AI models and algorithms, which supports optimizing how they run. I received a Silver medal (top 4%) in this competition.
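
As a rough illustration of the GCN propagation step, here is a minimal pure-Python sketch of one graph convolution. It uses the symmetric normalization ReLU(D^-1/2 (A + I) D^-1/2 H W) that GCNConv-style layers apply by default. After the convolution come mean pooling, a linear runtime head, and model fusion as a weighted average of predictions. The pooling, the head, the fusion weights, and all function names are assumptions for illustration; the competition model itself is not reproduced here.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gcn_conv(adj: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 H W), with self-loops added to A."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # degree includes the self-loop, so it is never zero
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(norm, h), w)
    return [[max(0.0, v) for v in row] for row in out]


def predict_runtime(adj: Matrix, h: Matrix, w: Matrix, head: List[float]) -> float:
    """Hypothetical readout: mean-pool node embeddings, then apply a linear head."""
    z = gcn_conv(adj, h, w)
    pooled = [sum(col) / len(z) for col in zip(*z)]
    return sum(p * c for p, c in zip(pooled, head))


def fuse(preds: List[float], weights: Optional[List[float]] = None) -> float:
    """Model fusion as a weighted average of per-model predictions (equal weights by default)."""
    weights = weights if weights is not None else [1.0] * len(preds)
    return sum(p * wt for p, wt in zip(preds, weights)) / sum(weights)


# Toy two-node graph with one feature per node (all values hypothetical).
adj = [[0.0, 1.0], [1.0, 0.0]]
h = [[1.0], [1.0]]
w = [[1.0]]
print(predict_runtime(adj, h, w, head=[2.0]))  # 2.0
print(fuse([1.0, 3.0]))                        # 2.0
```

Averaging is only the simplest form of fusion; stacking or rank averaging would slot into `fuse` the same way.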

Competition AI Algorithm GitHub

Kaggle-LLM 2023/10

A Kaggle competition exploring the potential of LLMs. We encoded the input with DeBERTa-v3-large and built a reading comprehension model using AutoModelForMultipleChoice from the Transformers library. To answer each multiple-choice question, our pipeline first matches the prompt and options against the source text and then uses the model to predict the correct answer. To find that source text, we used a sentence-vector approach: we measured the similarity between the prompt and the original Wikipedia texts and extracted the most similar passage as the primary reference for the question. Our model achieved a MAP@3 of 0.906 on the test set. Our work can help researchers better understand the ability of LLMs to test themselves. I received a Bronze medal (top 7%) in this competition.
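
The retrieval step and the leaderboard metric can be sketched in a few lines. The sketch assumes the prompt and the Wikipedia passages have already been turned into sentence vectors by some encoder; the description does not name it. It leaves out the DeBERTa-v3-large multiple-choice scoring. MAP@3 is computed in the usual way for a single correct answer per question.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def cosine(u: Vec, v: Vec) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def retrieve_context(prompt_vec: Vec, passage_vecs: List[Vec], k: int = 1) -> List[int]:
    """Indices of the k passages whose embeddings are most similar to the prompt's."""
    order = sorted(range(len(passage_vecs)),
                   key=lambda i: cosine(prompt_vec, passage_vecs[i]), reverse=True)
    return order[:k]


def map_at_3(predictions: List[List[str]], answers: List[str]) -> float:
    """MAP@3 with one correct answer per question: 1/rank if it is in the top 3, else 0."""
    total = 0.0
    for preds, answer in zip(predictions, answers):
        for rank, label in enumerate(preds[:3], start=1):
            if label == answer:
                total += 1.0 / rank
                break
    return total / len(answers)


print(retrieve_context([1.0, 0.0], [[0.0, 1.0], [1.0, 0.1], [0.5, 0.5]], k=2))  # [1, 2]
print(map_at_3([["A", "B", "C"], ["B", "A", "C"], ["D", "E", "B"]], ["A", "A", "A"]))  # 0.5
```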

Competition LLM GitHub

CV-Hardware Co-designing for Steelworks 2023/09-Present

> Ported the YOLOv5 model to an FPGA using an HLS compiler and optimized the algorithms for hardware deployment.
> Leveraged FPGA programmability to explore a customized YOLOv5 design for industrial settings.
> Annotated the components of clay gun machines in a large set of images and videos from actual production, and trained a YOLOv5 object detection model on them.
> Annotated feature sub-blocks separately on moving-block and fixed-block images, and trained a YOLOv5 segmentation model for the feature sub-blocks.
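
For the annotation step, YOLOv5 expects one text file per image, with one line per object. Each line holds a class index followed by the box center x, center y, width, and height, all normalized to [0, 1]. A minimal converter from pixel-corner boxes is sketched below. The class indices (for example, 0 for a clay gun component) are hypothetical.

```python
from typing import Iterable, Tuple

# (class_id, x_min, y_min, x_max, y_max) in pixel coordinates
Box = Tuple[int, float, float, float, float]


def to_yolo_line(box: Box, img_w: int, img_h: int) -> str:
    """Convert one pixel-corner box into a YOLOv5 label line (normalized center and size)."""
    cls, x0, y0, x1, y1 = box
    xc = (x0 + x1) / 2 / img_w
    yc = (y0 + y1) / 2 / img_h
    bw = (x1 - x0) / img_w
    bh = (y1 - y0) / img_h
    return f"{cls} {xc:.6f} {yc:.6f} {bw:.6f} {bh:.6f}"


def to_label_file(boxes: Iterable[Box], img_w: int, img_h: int) -> str:
    """Contents of the .txt label file for one image."""
    return "\n".join(to_yolo_line(b, img_w, img_h) for b in boxes)


# Hypothetical 400x500-pixel image with one box of class 0.
print(to_label_file([(0, 100, 50, 300, 250)], 400, 500))
# 0 0.500000 0.300000 0.500000 0.400000
```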

Research CV Hardware FPGA Industrial application GitHub

COVID-19 Related Medical Text Summarization with BERT 2022/12-2023/01

> Developed a BiLSTM-based Chinese text encoding method and embedded it into the encoding layer.
> Designed a novel encoder with four components: a fine-tuned BERT embedding layer, a BiLSTM layer, a series of convolutional gates, and a self-attention layer.
> Independently collected corpora from various Chinese medical publications and pre-trained BERT on the preprocessed data to enhance sentence-level semantic representations.
> Achieved automatic summarization of medical texts, significantly reducing the time spent on reading and collecting material.
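
Two of the four encoder components, the convolutional gate and the self-attention layer, can be sketched in pure Python on a sequence of token vectors (for example, BiLSTM outputs). This is a simplified illustration under stated assumptions. The gate uses one shared 1-D kernel with zero padding. The attention layer uses the inputs directly as queries, keys, and values, with no learned projections. The actual layer sizes and parameterization are not given in the description.

```python
import math
from typing import List

Seq = List[List[float]]  # one d-dimensional vector per token


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def conv_gate(seq: Seq, kernel: List[float]) -> Seq:
    """Gate each value by the sigmoid of a zero-padded 1-D convolution over neighbouring tokens."""
    r = len(kernel) // 2
    n, d = len(seq), len(seq[0])
    out = []
    for t in range(n):
        row = []
        for j in range(d):
            s = sum(wk * seq[t + k - r][j]
                    for k, wk in enumerate(kernel) if 0 <= t + k - r < n)
            row.append(seq[t][j] * sigmoid(s))
        out.append(row)
    return out


def self_attention(seq: Seq) -> Seq:
    """Scaled dot-product self-attention with Q = K = V = seq (no learned projections)."""
    d = len(seq[0])
    out = []
    for q in seq:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in seq]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        weights = [e / z for e in exps]
        out.append([sum(wt * v[j] for wt, v in zip(weights, seq)) for j in range(d)])
    return out


tokens = [[2.0, 4.0], [1.0, 0.0], [0.0, 1.0]]  # hypothetical encoder outputs
gated = conv_gate(tokens, kernel=[0.0, 0.0, 0.0])  # zero kernel: every gate is 0.5
print(gated[0])  # [1.0, 2.0]
print(len(self_attention(gated)))  # 3
```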

Project NLP BERT GitHub

Research and Application of Temporal Reasoning 2022/11-2023/10

> Empirically studied the temporal misalignment of PLMs at the lexical level, revealing that tokens/words with salient lexical semantic change contribute much more to the misalignment problem than other tokens/words.
> Proposed a temporal reasoning video-language pre-training framework with both understanding and generation capabilities for video and language.
> Introduced temporal reasoning pre-training tasks that learn temporally aware multimodal representations by modeling fine-grained temporal moment information and capturing the temporal contextual relations between moments and events.
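
One common way to quantify lexical semantic change is to compare a word's average contextual embedding in an older corpus with its average in a newer one. This gives a way to find the words that drive temporal misalignment. The sketch below ranks words by the cosine distance between those two averages. It assumes the per-usage embeddings were already extracted from a PLM, and it is not necessarily the measure used in this study.

```python
import math
from typing import Dict, List

Vec = List[float]


def mean_vec(vecs: List[Vec]) -> Vec:
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def cosine(u: Vec, v: Vec) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def semantic_change(old: List[Vec], new: List[Vec]) -> float:
    """Cosine distance between a word's mean usage embeddings in two time periods."""
    return 1.0 - cosine(mean_vec(old), mean_vec(new))


def rank_by_change(old_usages: Dict[str, List[Vec]],
                   new_usages: Dict[str, List[Vec]]) -> List[str]:
    """Words seen in both periods, most changed first."""
    shared = old_usages.keys() & new_usages.keys()
    return sorted(shared, key=lambda w: semantic_change(old_usages[w], new_usages[w]),
                  reverse=True)


# Toy 2-D "embeddings" for two words in an old and a new corpus (hypothetical).
old = {"virus": [[1.0, 0.0]], "table": [[1.0, 0.0], [0.8, 0.2]]}
new = {"virus": [[0.0, 1.0]], "table": [[0.9, 0.1]]}
print(rank_by_change(old, new))  # ['virus', 'table']
```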

Research NLP Pre-trained language model Video-language model Multimodal GitHub

COVID-19 ConsultantGPT Based on BERT-GPT 2022/11-2023/02

> Collected, annotated, and improved the Chinese medical dialogue dataset CovidDialog-Chinese, and used it to train a BERT-GPT-based model.
> Used the PyTorch and TensorFlow frameworks together with two pre-trained language models, BERT and GPT.
> Implemented a model that converses with patients in real time, estimates a patient's probability of COVID-19 infection from their specific symptoms and past behavior, and provides targeted medical advice.
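
In a BERT-GPT setup, the BERT encoder reads the dialogue history and the GPT decoder generates the next doctor reply. Each multi-turn dialogue is therefore typically unrolled into (history, response) training pairs. A minimal sketch of that preprocessing follows. The speaker labels, the "[SEP]" joiner, and the character-based truncation limit are assumptions, not details taken from CovidDialog-Chinese.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (speaker, utterance); the speaker labels here are hypothetical


def build_pairs(dialogue: List[Turn], max_src_chars: int = 512,
                sep: str = "[SEP]") -> List[Tuple[str, str]]:
    """Unroll a dialogue into (history, doctor reply) pairs for encoder-decoder training."""
    pairs = []
    history: List[str] = []
    for speaker, text in dialogue:
        if speaker == "doctor" and history:
            # Keep the most recent context if the history exceeds the length budget.
            src = sep.join(history)[-max_src_chars:]
            pairs.append((src, text))
        history.append(f"{speaker}: {text}")
    return pairs


dialogue = [
    ("patient", "I have had a fever for three days."),
    ("doctor", "Do you also have a cough?"),
    ("patient", "Yes, a dry cough."),
    ("doctor", "Please get tested for COVID-19."),
]
for src, tgt in build_pairs(dialogue):
    print(repr(src), "->", repr(tgt))
```

Truncating from the left keeps the latest turns, which usually matter most for the next reply.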

Research GPT BERT NLP GitHub

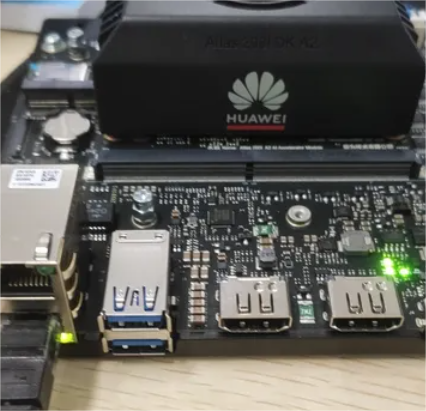

Monocular Depth Estimation on Ascend Development Board 2022/09-2023/01

> Led a team carrying out a monocular depth estimation project on the Atlas 200DK development board, implementing computer vision in hardware.
> Used ResNet to strengthen a deep learning network for monocular depth estimation and converted the model for deployment on the development board; the result was accepted by the board's provider, Huawei.
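
The description does not say how depth quality was measured. Monocular depth models are commonly reported with absolute relative error (AbsRel), RMSE, and the δ < 1.25 threshold accuracy. A minimal sketch of those metrics over flattened depth maps follows. It assumes positive predicted depths and treats ground-truth values of 0 as invalid pixels.

```python
import math
from typing import Dict, List


def depth_metrics(pred: List[float], gt: List[float]) -> Dict[str, float]:
    """AbsRel, RMSE and delta < 1.25 accuracy over pixels with valid (positive) ground truth."""
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    n = len(pairs)
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    delta1 = sum(1 for p, g in pairs if max(p / g, g / p) < 1.25) / n
    return {"abs_rel": abs_rel, "rmse": rmse, "delta1": delta1}


# Hypothetical flattened depth maps in metres; the last pixel has no ground truth.
m = depth_metrics([1.0, 2.0, 4.0, 5.0], [1.0, 2.0, 2.0, 0.0])
print({k: round(v, 4) for k, v in m.items()})
# {'abs_rel': 0.3333, 'rmse': 1.1547, 'delta1': 0.6667}
```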

Project CV Hardware GitHub

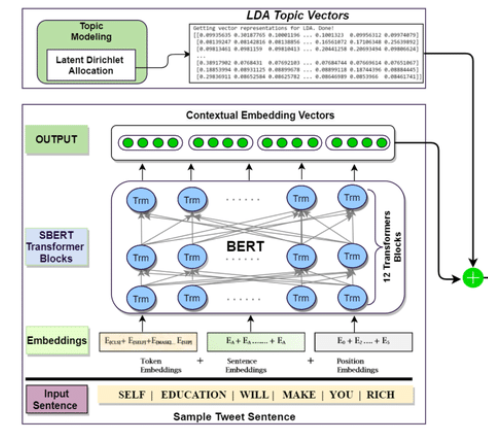

LDA-BERT for Aspect-Level Sentiment Analysis 2022/09-2023/01

> Introduced a guided LDA model that leverages a small set of general seed words for each aspect category, combined with an automatic seed set expansion module based on BERT similarity for better and faster topic convergence.
> Used specially designed RE-based linguistic rules to better capture multi-word aspects.
> Filtered the inputs guiding the LDA model with multiple pruning strategies to strengthen semantic signals where co-occurrence statistics cannot serve as a differentiating factor.
> Applied particle swarm optimization (PSO) to tune the threshold parameters of the seed set expansion and the RE-based input filter.
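
The seed set expansion and the PSO threshold search can be sketched together. A candidate word joins an aspect's seed set when the cosine similarity between its embedding and the seed centroid reaches a threshold. A plain global-best PSO then searches over thresholds to minimize an objective. The toy embeddings stand in for BERT vectors. The objective in the usage example is a hypothetical placeholder for whatever validation loss the real pipeline used.

```python
import math
import random
from typing import Callable, Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def expand_seeds(seeds: List[str], candidates: List[str],
                 embed: Dict[str, List[float]], threshold: float) -> List[str]:
    """Add candidates whose similarity to the seed centroid is at least `threshold`."""
    centroid = [sum(col) / len(seeds) for col in zip(*(embed[s] for s in seeds))]
    added = [w for w in candidates
             if w not in seeds and cosine(embed[w], centroid) >= threshold]
    return seeds + added


def pso_minimize(f: Callable[[List[float]], float], bounds: List[Tuple[float, float]],
                 n_particles: int = 20, iters: int = 100, w: float = 0.7,
                 c1: float = 1.5, c2: float = 1.5, seed: int = 0) -> Tuple[List[float], float]:
    """Global-best particle swarm optimization within box bounds."""
    rng = random.Random(seed)
    xs = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vs = [[0.0] * len(bounds) for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pval = [f(x) for x in xs]
    g = min(range(n_particles), key=lambda i: pval[i])
    gbest, gval = pbest[g][:], pval[g]
    for _ in range(iters):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward the particle's own best + pull toward the swarm's best.
                vs[i][d] = (w * vs[i][d] + c1 * r1 * (pbest[i][d] - xs[i][d])
                            + c2 * r2 * (gbest[d] - xs[i][d]))
                xs[i][d] = min(hi, max(lo, xs[i][d] + vs[i][d]))
            val = f(xs[i])
            if val < pval[i]:
                pbest[i], pval[i] = xs[i][:], val
                if val < gval:
                    gbest, gval = xs[i][:], val
    return gbest, gval


embed = {"food": [1.0, 0.0], "meal": [0.9, 0.1], "price": [0.0, 1.0]}  # toy vectors
print(expand_seeds(["food"], ["meal", "price"], embed, threshold=0.9))  # ['food', 'meal']

# Hypothetical objective with its optimum at thresholds (0.6, 0.3).
best, loss = pso_minimize(lambda t: (t[0] - 0.6) ** 2 + (t[1] - 0.3) ** 2,
                          bounds=[(0.0, 1.0), (0.0, 1.0)])
print([round(t, 2) for t in best])
```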

Research BERT LDA NLP GitHub

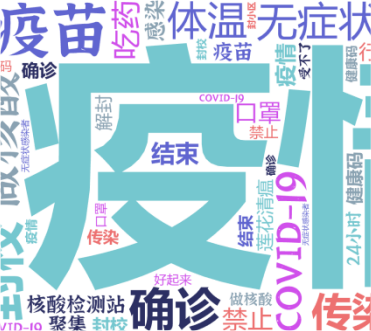

Deep Learning for NLP and Data Science 2022/07-2022/09

> Processed and labeled the acquired data, and applied machine learning and natural language processing techniques to analyze it, producing results of practical relevance to social problems and supporting concrete recommendations.
> Collected online public opinion data on COVID-19 from the Sina Weibo platform in the first half of 2022, processed and annotated it according to the BERT model's input requirements, and fine-tuned BERT on it.
> Added new modules to the model to improve accuracy on the public opinion analysis task.
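
Preparing Weibo posts for BERT usually starts with stripping platform-specific noise before tokenization. A minimal sketch follows. It removes URLs and @-mentions, unwraps #topic# hashtags, and maps a sentiment label to an integer id. The three-way label scheme, the regular expressions, and the character-level length cap are assumptions for illustration; the annotation scheme actually used is not described above.

```python
import re
from typing import Dict

URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@[^\s:：，,]+")  # mentions end at whitespace or punctuation
HASHTAG_RE = re.compile(r"#([^#]+)#")      # Weibo topics are written as #topic#

LABELS = {"negative": 0, "neutral": 1, "positive": 2}  # hypothetical label scheme


def clean_post(text: str) -> str:
    """Drop URLs and @-mentions, keep hashtag text, and collapse whitespace."""
    text = URL_RE.sub("", text)
    text = MENTION_RE.sub("", text)
    text = HASHTAG_RE.sub(r"\1", text)
    return " ".join(text.split())


def to_example(text: str, label: str, max_chars: int = 510) -> Dict[str, object]:
    """One training example. The cap is rough: Chinese BERT uses about one token per
    Chinese character, and 510 leaves room for [CLS] and [SEP]."""
    return {"text": clean_post(text)[:max_chars], "label": LABELS[label]}


print(to_example("@alice #COVID# stay safe http://t.cn/abc", "positive"))
# {'text': 'COVID stay safe', 'label': 2}
```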

Research NLP Data Science Deep Learning GitHub
